A lab at Rensselaer Polytechnic Institute in Troy, New York, is “opening the eyes” of its robots in a bid to improve humans’ quality of life.

“Hi, everyone. Welcome to Intelligence Systems Lab. Today we are going to show you a few demos. One of them is Zeno, which can mimic your facial movements, body gestures, and speeches. The other one is me, Pepper. I can guess your age, gender, expressions, and so on. Hope you enjoy the demos today.”

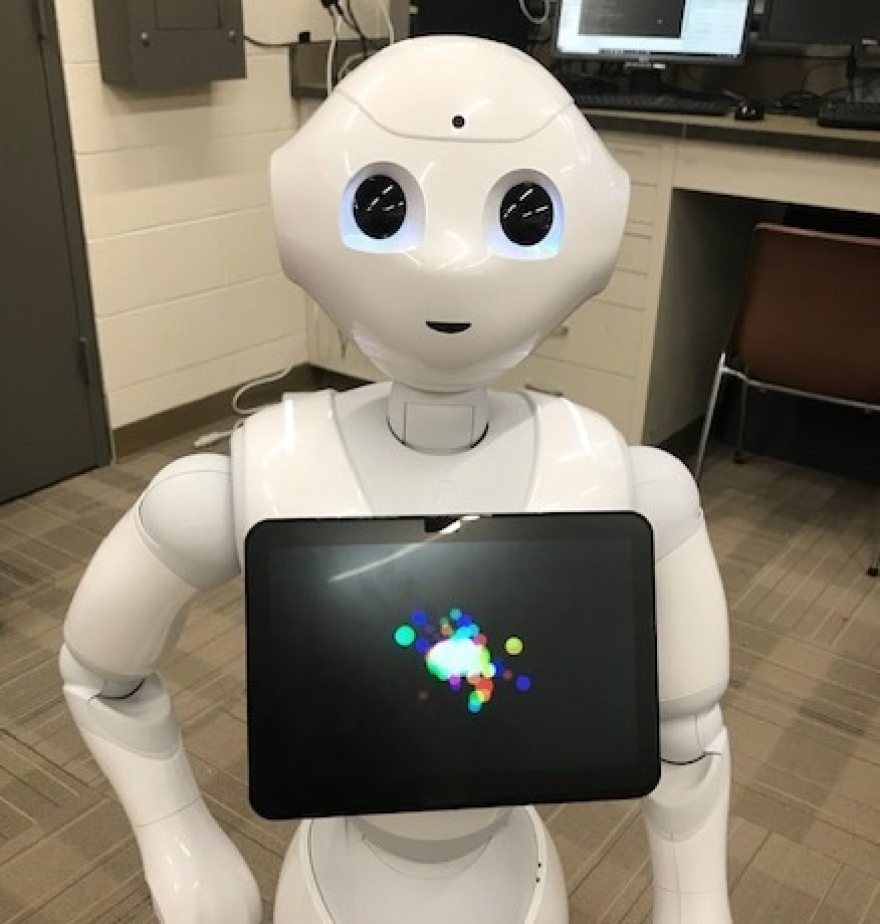

That’s Pepper, the bright, friendly robot that roams the Intelligence Systems Lab at Rensselaer Polytechnic Institute. Pepper is adorable, with a small smile and gigantic eyes, but it’s also the result of years of research in computer vision, the field of Lab Director and Professor Qiang Ji.

“Computer vision is a sub-area of AI, where we’re developing computing technology to equip the machine with perception capabilities,” Ji explains. “But my specific research area is called ‘human-centered vision.’ So basically we want to develop a computer-vision technology to understand humans’ non-verbal behaviors.”

In other words, Pepper doesn’t just respond to voice commands, like Amazon’s Alexa; it also reads your facial expressions and body language. Ji envisions a future where machines “live” side by side with humans, both as research partners and as in-home companions for older or disabled residents. But to get there, Ji says, robots need vision to interact more naturally with humans.

“I think the machine and the human will coexist,” he says. “And in order for that to happen, we have to build trust between machines and humans; otherwise humans will always treat machines as a machine, right? So now if the machine can respond in a very similar, natural manner like a human, then gradually there will be a trust.”

As Pepper zooms around the Lab, its camera and software allow it to count the people in a room and guess their age, gender, and expressions.

“I counted three male and two female,” announces Pepper. “One person looked angry. One person looked afraid. One person looked sad. Two people looked neutral. Finished counting.”

Michael Li, a senior at RPI, demonstrates that Pepper is also programmed to recognize and locate specific people in a room. He says Pepper is happy to make its estimates one-on-one, but you have to show it common courtesy.

“So it has eye-gaze estimation, so if I’m not looking at [Pepper], as a human you need to look at the other people to talk, so Pepper’s response was ‘You need to look at [me],’” Li explains.

On a desk across the Lab, Zeno, a small blue robot, doesn’t have all of Pepper’s bells and whistles, but it still takes things a step further. Designed as a companion for children with autism, Zeno mimics the facial expressions of the person in front of it using five motors in its cheeks, eyes, eyebrows, and mouth. Zeno can also mimic the “most basic” body movements, like a boxing stance.

“You’re going down,” Zeno declares.

Ji says computer vision has many applications beyond humanoid robots. He expects it could eventually help detect diseases or assist drivers.

“We can put a camera in the dashboard, monitor the driver behavior, to see if the driver is focused on driving or not,” he notes. “And we can detect fatigued driving, or we can detect distraction during driving. And we can also warn the driver if there’s some dangerous behavior.”

Using cameras that track their gaze, students in the Lab can select options on a screen and play “Whack-a-Mole” without moving an inch. Of course, the stars of the Lab are Pepper and Zeno. Ji says the physical technology for AI has been around for years, but increased computing power and big data are what’s driving the current “AI Revolution.” Li and Ji show that Pepper’s range of knowledge is limited, for now.

“So basically it has all this voice capability, just like the Amazon Alexa, but here we’re focused more on vision,” Ji says. “So in the future we’re willing to combine the two.”

Critics have warned about AI’s economic impacts and unintended consequences, so if all this conjures visions of increased automation and “robots taking over the world,” you’re probably not alone. But Ji says that’s a common misconception about AI.

“I believe that the purpose of AI is not to replace humans,” he notes. “It is more important to augment humans: to augment humans’ physical capabilities, to augment humans’ mental capabilities, and to improve humans’ life.”